How-To Configure the Snowpipe Streaming API Connection

What Does This Article Cover?

Intelligence Hub includes a configurable Connection that allows Intelligence Hub solutions to write data to Snowflake tables. The Snowflake Snowpipe Streaming API Connection supports rapidly writing small payloads of data from Intelligence Hub creating new rows of data in Snowflake tables. This article covers the following topics.

- What is Snowflake Platform?

- What is the Snowpipe Streaming API?

- Design Considerations

- Snowflake Account Information

- Snowflake Key Pair Authorization

- Intelligence Hub Connection Overview Configuration

- Intelligence Hub Connection Output Configuration

What is Snowflake Platform?

Snowflake is a fully managed SaaS (software as a service) relational database for building data warehouses. It’s built on AWS, Azure, and Google cloud platforms and combines the functionalities of traditional databases with a suite of advanced capabilities. Snowflake provides a means for the storage, processing, and analysis of large amounts of data. By using cloud technology, it allows users to perform multiple tasks faster than traditional big data platforms built on conventional databases.

What is Snowpipe Streaming API?

The Snowpipe Streaming API prompts low-latency loads of streaming data rows. The streaming ingest API writes rows of data to Snowflake tables, unlike bulk data loads or Snowpipe, which write data from staged files. This architecture results in lower load latencies, with corresponding lower costs for loading similar volumes of data, which makes it a powerful tool for handling real-time data streams.

Design Considerations:

The typical approach for loading terabytes of data into Snowflake is with files. For example, using AWS S3 and supported file types like .CSV and Parquet. If the requirement is to process a 100MB file for example then the Standard Snowpipe API is the most cost effective approach for loading the data into Snowflake. There is typically a cost efficiency sweet spot for the file size that is between a few 100MBs and 1GB. With the Standard Snowpipe API and a file based approach the minimum latency is approximately 90 seconds. If files are small then the Standard Snowpipe API is less cost effective.

When the data transfer is frequent and would otherwise consist of many small files it is most cost effective to insert rows directly into Snowflake tables using the Snowpipe Streaming API. This is a row-by-row approach and can be more cost effective because less Snowflake compute being used.

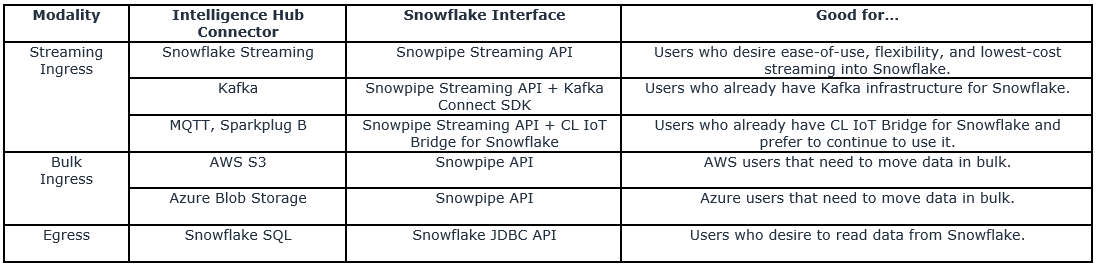

Given these considerations, there are multiple options for exchanging data between Intelligence Hub and Snowflake to align with a variety of use cases. Therefore, it is important to select the proper approach to align with a given use case and solution.

The Intelligence Hub Snowflake SQL Connection can be used to interact with Snowflake tables. This approach is best when the requirements include basic reading and writing to and from Snowflake tables and the latency requirement is not low and large datasets are not being exchanged.

If a large volume of data is being processed by Intelligence Hub like a large amount of historian data then a file based approach might be best. In this scenario, the Intelligence Hub Connections for AWS S3 or Azure Blob Storage could be used to output files. Snowflake services would then obtain data from these files in their respective cloud storage repositories.

If the requirements necessitate a low latency solution consisting of frequently writing relatively small payloads of data to Snowflake tables then the Intelligence Hub options include the Kafka Connection and the Snowpipe Streaming API Connection. If there is already Kafka infrastructure in place then the Kafka Connection might be best, otherwise the Snowpipe Streaming API Connection is recommended as the fastest and easiest option to configure in Intelligence Hub. There are some limitations. The Snowpipe Streaming API Connection is limited to a 16MB payload size and the Connection inserts records into Snowflake but does not perform updates, inserts, or upserts. Finally, semi-structured data like a JSON payload may be written to a Snowflake table column. Snowflake services can unpack, flatten, and manipulate data after it is written to a Snowflake table.

Snowflake Account Information:

In order to configure the Intelligence Hub Snowpipe Streaming API Connection Snowflake account and database information must be obtained. The Connection requires the account identifier, a specific user, and a specific user role.

An account identifier uniquely identifies a Snowflake account within your organization, as well as throughout the global network of Snowflake-supported cloud platforms and cloud regions. The account identifier can be found with account information. In the image below the example account identifier is “ZGB28743”.

A specific user account needs to be selected to authenticate with. In the image below the example user account is “SERVICEACCOUNT”.

In Snowflake a user may be assigned to multiple roles. A role must also be specified for the Connection. Optimally, a role with minimum capabilities so be created. The role must have to have the permission to query for tables, use the Streaming API, and if desired create tables. In the image below the example role is “HIGHBYTE_APP_DEV”.

Information related to the location of the table to be written to or created is also needed. The Intelligence Hub Snowpipe Streaming API Connection requires the Database, Schema, and Warehouse to be entered.

In the image below the example Warehouse name is “COMPUTE_WH”.

The Database and Schema may be found in Snowflake under the Data menu selection. In the image below the example Database is “HIGHBYTE_DB” and the example Schema is “PUBLIC”.

Snowflake Key Pair Authorization:

The Intelligence Hub Snowpipe Streaming API Connection uses a key pair for authentication. The key pair must be created according to the instructions provided in Snowflake documentation. The generated certificate and private key are configured in Intelligence Hub as a Certificate.

The Snowflake instructions for generating the key pair are provided here. https://docs.snowflake.com/en/user-guide/key-pair-auth

The following are the steps for Intelligence Hub.

- Run the following commands to create three files

- Run: openssl genrsa -out privkey.pem 4096

- Run: openssl rsa -in privkey.pem -out pubkey.pem -pubout -outform PEM

- Run: openssl req -new -x509 -key privkey.pem -out cacert.pem -days 1095

- Create the Intelligence Hub certification using the contents of the cert and private key files

- In Snowflake set the user’s public key by running a query using the contents of the public key file

Intelligence Hub Connection Overview Configuration:

The Snowflake Connection connects to Snowflake using the Snowpipe Streaming API. The connection also uses the Snowflake JDBC driver to search for and create tables. The information obtained and configured as described above is entered into Intelligence Hub and used to establish a connection to Snowflake.

Intelligence Hub Connection Output Configuration:

Intelligence Hub Connection Outputs are configured to create and stream data to tables in the Snowflake database. The configuration consists of indicating a table name and whether or not a new table will be created. A JSON payload may be written to a table column.